Understanding the Foundation of Job Aggregation Through API Scraping

Job aggregators consolidate openings from many sources into a single searchable database. Behind them sit API scraping pipelines that collect, process, and organize job data from employment platforms, corporate websites, and recruitment agencies.

Scraping APIs for a job aggregator means extracting data systematically so that listings stay comprehensive and current across industries, locations, and skill levels. Doing this well requires attention to three areas: technical implementation, legal compliance, and data quality management.

The Technical Architecture Behind Effective API Scraping

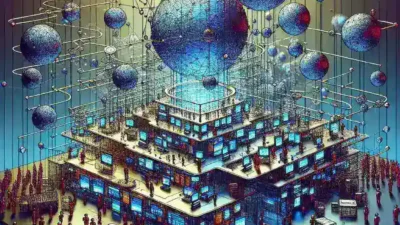

Modern job aggregators typically combine several collection strategies: REST API endpoints, GraphQL queries, and traditional web scraping. The resulting data pipelines must handle varying response formats, rate limits, and authentication requirements across different job boards and recruitment platforms.

Successful API scraping operations require careful attention to request frequency management, data parsing algorithms, and error handling mechanisms. Developers must implement intelligent retry logic, proxy rotation systems, and comprehensive monitoring tools to ensure consistent data collection while respecting the terms of service of target platforms. The architecture should also include data deduplication processes, content normalization procedures, and quality assurance protocols to maintain database integrity.
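The retry logic mentioned above can be sketched as exponential backoff with jitter. This is a minimal illustration rather than a production client: `TransientError`, `backoff_delays`, and `fetch_with_retry` are hypothetical names, and the `fetch` callable stands in for whatever HTTP call a given source needs.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical error type for failures worth retrying (timeouts, HTTP 429/503)."""


def backoff_delays(max_attempts: int, base: float = 1.0, cap: float = 60.0) -> list[float]:
    """Upper bound of the wait before each retry: base * 2**n, capped at `cap`."""
    return [min(cap, base * 2 ** n) for n in range(max_attempts - 1)]


def fetch_with_retry(
    fetch: Callable[[], T],
    max_attempts: int = 4,
    base: float = 1.0,
    cap: float = 60.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `fetch`, retrying on TransientError with jittered exponential backoff."""
    delays = backoff_delays(max_attempts, base, cap)
    for attempt in range(max_attempts):
        try:
            return fetch()
        except TransientError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the failure to the caller
            # Random wait in [0, delay] so parallel workers do not retry in lockstep.
            sleep(random.uniform(0, delays[attempt]))
    raise RuntimeError("max_attempts must be at least 1")
```

Passing `sleep` as a parameter keeps the function testable without real waiting. Non-transient errors (for example an HTTP 404) are deliberately not retried here.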

Strategic Approaches to Multi-Platform Data Integration

Each source a job aggregator integrates has its own API structure, authentication method, and data format. Handling this requires flexible integration frameworks that adapt to each platform's specification while keeping data quality consistent. A common strategy is a standardized data schema that accommodates every source without losing critical details or context.

The most effective approaches include implementing modular scraping components that can be easily configured for different platforms, establishing robust data validation processes, and creating comprehensive mapping systems that translate platform-specific fields into standardized formats. These systems must also handle real-time updates, manage data conflicts between sources, and maintain historical records for trend analysis and reporting purposes.
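One way to picture the mapping layer is a per-source table that translates platform-specific field names into a shared schema. The sketch below assumes two made-up sources (`board_a`, `board_b`) and invented payload keys; the `JobPosting` fields are illustrative, not a standard.

```python
from dataclasses import dataclass
from typing import Any, Optional


@dataclass(frozen=True)
class JobPosting:
    """Hypothetical canonical schema shared by all sources."""
    source: str
    source_id: str
    title: str
    company: str
    location: Optional[str] = None


# Assumed mappings: canonical field -> key used in that source's payload.
FIELD_MAPS = {
    "board_a": {"source_id": "id", "title": "jobTitle", "company": "employer", "location": "city"},
    "board_b": {"source_id": "ref", "title": "position", "company": "company_name", "location": "loc"},
}

REQUIRED = ("source_id", "title", "company")


def normalize(source: str, raw: dict[str, Any]) -> JobPosting:
    """Translate one raw payload into the canonical schema, validating required fields."""
    mapping = FIELD_MAPS[source]
    fields = {canon: raw.get(key) for canon, key in mapping.items()}
    missing = [name for name in REQUIRED if not fields.get(name)]
    if missing:
        raise ValueError(f"{source}: missing required fields {missing}")
    location = fields.get("location")
    return JobPosting(
        source=source,
        source_id=str(fields["source_id"]),
        title=str(fields["title"]).strip(),
        company=str(fields["company"]).strip(),
        location=str(location).strip() if location else None,
    )
```

Adding a new platform then means adding one entry to `FIELD_MAPS` rather than writing a new parser, which is the modularity the paragraph above describes.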

Navigating Legal and Ethical Considerations

The legal landscape surrounding API scraping for job aggregation involves complex considerations that vary significantly across jurisdictions and platforms. Operators must carefully review terms of service agreements, respect robots.txt directives, and implement appropriate rate limiting to avoid overwhelming target systems. Ethical scraping practices include obtaining proper permissions when required, respecting intellectual property rights, and ensuring that data collection activities do not negatively impact the performance of source platforms.
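Checking robots.txt directives can be automated with Python's standard `urllib.robotparser` module. The sketch below parses an inline sample so that it runs without network access; the bot name, the URLs, and the sample rules are assumptions for illustration. A real crawler would fetch each site's actual robots.txt, and passing this check does not replace reviewing the site's terms of service.

```python
from urllib.robotparser import RobotFileParser


def build_parser(robots_txt: str) -> RobotFileParser:
    """Build a parser from robots.txt text that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


# Made-up rules for a hypothetical job board.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

BOT_NAME = "example-aggregator-bot"  # hypothetical user agent string

parser = build_parser(SAMPLE_ROBOTS)
allowed = parser.can_fetch(BOT_NAME, "https://jobs.example.com/listings/123")
blocked = parser.can_fetch(BOT_NAME, "https://jobs.example.com/private/report")
delay = parser.crawl_delay(BOT_NAME)  # seconds between requests, or None if unset
```

The `Crawl-delay` value, when present, is a natural input to whatever rate limiter the scraper uses for that host.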

Many successful job aggregators establish formal partnerships with major job boards and recruitment platforms, creating mutually beneficial relationships that provide reliable data access while respecting platform policies. These partnerships often involve revenue sharing arrangements, branded integration opportunities, and collaborative development of specialized APIs designed specifically for aggregation purposes.

Advanced Data Processing and Quality Management

Maintaining high-quality job listings requires sophisticated data processing pipelines that can identify and eliminate duplicate postings, detect fraudulent or misleading listings, and ensure that all collected information remains current and accurate. Quality management systems typically employ machine learning algorithms to classify job types, extract relevant skills and requirements, and standardize location and salary information across different formats and conventions.
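A simple starting point for duplicate detection is an exact-match fingerprint over normalized fields. The sketch below assumes each posting is a dict with `title`, `company`, and `location` keys; the function names are hypothetical. It only catches duplicates that differ in case, punctuation, or spacing, so near-duplicates with reworded titles would need fuzzier matching than shown here.

```python
import hashlib
import re


def _canon(text: str) -> str:
    """Lowercase, replace punctuation with spaces, and collapse whitespace."""
    text = re.sub(r"[^\w\s]", " ", text.lower())
    return " ".join(text.split())


def fingerprint(title: str, company: str, location: str) -> str:
    """Stable hash of the normalized fields that identify a posting."""
    key = "|".join(_canon(part) for part in (title, company, location))
    return hashlib.sha256(key.encode("utf-8")).hexdigest()


def deduplicate(postings: list[dict]) -> list[dict]:
    """Keep the first posting seen for each fingerprint, preserving input order."""
    seen: set[str] = set()
    unique = []
    for post in postings:
        fp = fingerprint(post["title"], post["company"], post["location"])
        if fp not in seen:
            seen.add(fp)
            unique.append(post)
    return unique
```

Storing the fingerprint alongside each record also makes it cheap to detect the same posting arriving later from a different source.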

These systems must also handle the challenge of maintaining data freshness, as job postings have limited lifecycles and platforms frequently update or remove listings. Effective quality management includes implementing automated content verification processes, establishing feedback loops for user-reported issues, and creating comprehensive audit trails for compliance and troubleshooting purposes.
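Freshness checks can be as simple as tracking when each listing was last confirmed by its source. The sketch below assumes every listing dict carries a timezone-aware `last_seen` timestamp; the three-day default threshold is an arbitrary illustrative value, not a recommendation.

```python
from datetime import datetime, timedelta, timezone


def is_stale(last_seen: datetime, now: datetime, max_age: timedelta) -> bool:
    """A listing is stale if no source has confirmed it within `max_age`."""
    return now - last_seen > max_age


def split_by_freshness(
    listings: list[dict],
    now: datetime,
    max_age: timedelta = timedelta(days=3),  # assumed threshold for illustration
) -> tuple[list[dict], list[dict]]:
    """Return (fresh, stale) lists, preserving input order within each."""
    fresh, stale = [], []
    for listing in listings:
        target = stale if is_stale(listing["last_seen"], now, max_age) else fresh
        target.append(listing)
    return fresh, stale


# Typical call site: pass the current UTC time explicitly.
# fresh, stale = split_by_freshness(listings, datetime.now(timezone.utc))
```

Stale listings would typically be re-verified against the source or hidden from search results rather than deleted outright, so the audit trail stays intact.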

Optimizing Performance and Scalability

As job aggregators grow and expand their coverage, they must address significant scalability challenges related to data volume, processing speed, and system reliability. Performance optimization strategies include implementing distributed scraping architectures, utilizing cloud-based infrastructure for elastic scaling, and developing efficient caching mechanisms to reduce redundant API calls and improve response times.
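The caching idea can be illustrated with a small in-memory cache that expires entries after a fixed time-to-live. `TTLCache` is a hypothetical class written for this sketch; a distributed deployment would more likely use a shared cache service, which is not shown.

```python
import time
from typing import Any, Callable, Hashable


class TTLCache:
    """Minimal in-memory cache that reuses a value until it is `ttl_seconds` old."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: dict[Hashable, tuple[float, Any]] = {}

    def get_or_fetch(self, key: Hashable, fetch: Callable[[], Any]) -> Any:
        """Return the cached value for `key`, calling `fetch` only on a miss or expiry."""
        now = self._clock()
        hit = self._entries.get(key)
        if hit is not None and now - hit[0] < self._ttl:
            return hit[1]
        value = fetch()
        self._entries[key] = (now, value)
        return value
```

A call such as `cache.get_or_fetch(("board_a", "page=1"), fetch_page)` would then hit the upstream API at most once per TTL window for that key, where `fetch_page` is whatever function performs the request.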

Modern job aggregators often employ microservices architectures that allow independent scaling of different system components based on demand patterns. This approach enables platforms to allocate resources efficiently, maintain high availability during peak usage periods, and implement targeted optimizations for specific data sources or geographic regions.

Emerging Technologies and Future Trends

The field of API scraping for job aggregation continues to evolve with advances in artificial intelligence, machine learning, and data processing technologies. Emerging trends include the use of natural language processing for improved job description analysis, computer vision techniques for extracting information from image-based job postings, and predictive analytics for identifying trending skills and market demands.

Future developments may include more sophisticated automation capabilities, enhanced real-time processing systems, and improved integration with social media platforms and professional networks. These advances will likely enable job aggregators to provide more personalized recommendations, better matching algorithms, and comprehensive career guidance services.

Implementation Best Practices and Common Pitfalls

Successful implementation of API scraping for job aggregators requires careful planning, robust testing procedures, and ongoing maintenance protocols. Best practices include establishing comprehensive monitoring systems, implementing graceful degradation strategies for handling API failures, and maintaining detailed documentation for all scraping processes and data transformation procedures.
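Graceful degradation might look like the following: when a source fails, the aggregator serves the last successful snapshot and flags the result as degraded instead of failing the whole run. `ResilientSource` and `SourceResult` are hypothetical names, and the broad `except` is a simplification for the sketch.

```python
import logging
from dataclasses import dataclass
from typing import Callable

logger = logging.getLogger("aggregator")


@dataclass
class SourceResult:
    listings: list[dict]
    degraded: bool  # True when served from the last known good snapshot


class ResilientSource:
    """Wraps one source's fetch function and remembers its last successful result."""

    def __init__(self, name: str, fetch: Callable[[], list[dict]]):
        self.name = name
        self._fetch = fetch
        self._last_good: list[dict] = []

    def collect(self) -> SourceResult:
        try:
            listings = self._fetch()
        except Exception as exc:  # deliberately broad here; narrow it in real code
            logger.warning("source %s failed (%s); serving last snapshot", self.name, exc)
            return SourceResult(list(self._last_good), degraded=True)
        self._last_good = list(listings)
        return SourceResult(listings, degraded=False)
```

The `degraded` flag gives monitoring something concrete to alert on, and downstream code can decide whether stale data is acceptable for a given source.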

Common pitfalls include inadequate error handling, insufficient rate limiting, poor data validation processes, and failure to adapt to changes in target platform APIs. Successful operators invest in continuous monitoring, regular system updates, and proactive communication with data source platforms to maintain reliable operations and positive relationships.
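To make the rate limiting pitfall concrete, here is a minimal token bucket sketch. `TokenBucket` is a hypothetical class, and the rate and capacity would come from each platform's documented limits, which this example does not assume.

```python
import time
from typing import Callable


class TokenBucket:
    """Allows bursts of up to `capacity` requests, refilling at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: float, clock: Callable[[], float] = time.monotonic):
        self._rate = rate
        self._capacity = capacity
        self._tokens = capacity
        self._clock = clock
        self._updated = clock()

    def try_acquire(self) -> bool:
        """Consume one token if available; return False when the caller should wait."""
        now = self._clock()
        elapsed = now - self._updated
        # Refill in proportion to elapsed time, never exceeding capacity.
        self._tokens = min(self._capacity, self._tokens + elapsed * self._rate)
        self._updated = now
        if self._tokens >= 1:
            self._tokens -= 1
            return True
        return False
```

One bucket per target host keeps a slow or strict platform from throttling requests to the others.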

Building Competitive Advantages Through Strategic Data Collection

Leading job aggregators differentiate themselves through strategic approaches to data collection that go beyond basic job posting aggregation. These platforms often collect additional context information such as company reviews, salary benchmarks, industry trends, and skill demand analytics. Strategic data collection enables platforms to provide enhanced user experiences, valuable market insights, and comprehensive career guidance services that extend far beyond simple job search functionality.

The most successful platforms invest in developing proprietary data sources, establishing exclusive partnerships, and creating unique value propositions that attract both job seekers and employers. This approach requires sophisticated data analysis capabilities, comprehensive market research, and ongoing innovation in service offerings and user experience design.

Conclusion: The Future of Job Aggregation Through Intelligent API Scraping

Job aggregation continues to evolve as technology advances and demand shifts toward more personalized employment services. Successful platforms will be those that balance technical innovation with ethical considerations, legal compliance, and user value. The future lies in intelligent systems that not only collect and organize job data but also provide insights, career guidance, and market intelligence that benefit all stakeholders in the employment ecosystem.

As the industry matures, we can expect to see continued innovation in API scraping technologies, more sophisticated data processing capabilities, and enhanced integration between job aggregation platforms and other career-related services. The organizations that invest in building robust, scalable, and ethically responsible scraping systems will be best positioned to thrive in this competitive and rapidly evolving market.